An application that truly understands a natural language is something sci-fi enthusiasts, programmers, and AI researchers have dreamed about for decades. Today, thanks to large advances in machine learning technologies, that dream is closer than ever to becoming a reality. What’s more, cloud-based services such as Google Cloud Machine Learning have made those technologies available for anyone to use.

In this tutorial, you’ll learn how to use two powerful natural language-oriented APIs offered by the Google Cloud Machine Learning platform: Cloud Speech API and Cloud Natural Language API. By using them together, you can create apps that can handle speech in a variety of widely spoken languages.

Prerequisites

To follow along, you’ll need:

- Android Studio 2.2 or higher

- a Google Cloud Platform account

- a device that runs Android 4.4 or higher

1. Why Use These APIs?

An application that can process speech must have the following capabilities:

- It must be able to extract individual words from raw audio data.

- It must be able to make educated guesses about the grammatical relationships between the words it has extracted.

The Cloud Speech and Cloud Natural Language APIs enable you to add the above capabilities to your Android app in a matter of minutes.

The Cloud Speech API serves as a state-of-the-art speech recognizer that can accurately transcribe speech in over 80 languages. It can also robustly handle regional accents and noisy conditions.

Similarly, the Cloud Natural Language API is a language processing system that can determine, with near-human accuracy, the roles that words play in the sentences it is given. It currently supports ten languages, and it also offers entity and sentiment analysis.

2. Enabling the APIs

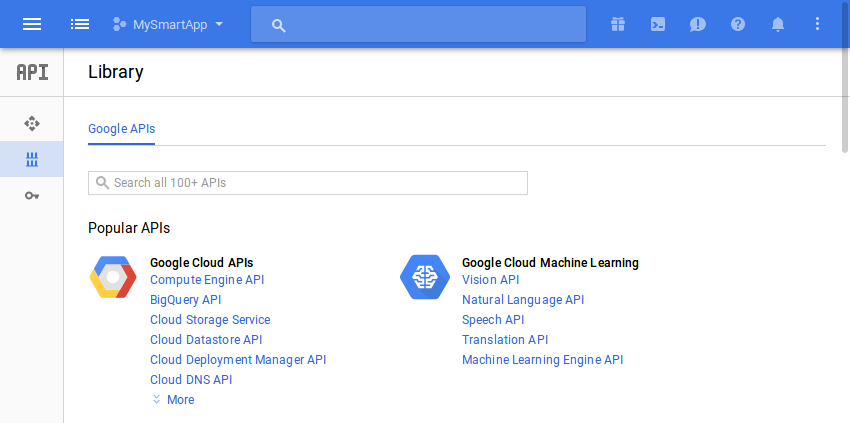

Before you use the Speech and Natural Language APIs, you must enable them in the Google Cloud console. So log in to the console and navigate to API Manager > Library.

To enable the Speech API, click on the Speech API link in the Google Cloud Machine Learning section. In the page that opens next, press the Enable button.

Press your browser’s back button to return to the previous page.

This time, enable the Natural Language API by clicking on the Natural Language API link and pressing the Enable button on the next page.

You’ll need an API key to interact with the APIs. If you don’t have one already, open the Credentials tab, press the Create credentials button, and choose API key.

You’ll now see a pop-up displaying your API key. Note it down so you can use it later.

3. Configuring Your Project

Both APIs are JSON-based and have REST endpoints you can interact with directly using any networking library. However, you can save a lot of time, and also write more readable code, by using the Google API Client libraries available for them. So open the build.gradle file of your project’s app module and add the following compile dependencies to it:

```groovy
compile 'com.google.api-client:google-api-client-android:1.22.0'
compile 'com.google.apis:google-api-services-speech:v1beta1-rev336-1.22.0'
compile 'com.google.apis:google-api-services-language:v1beta2-rev6-1.22.0'
compile 'com.google.code.findbugs:jsr305:2.0.1'
```

Additionally, we’ll be performing a few file I/O operations in this tutorial. To simplify them, add a compile dependency for the Commons IO library.

```groovy
compile 'commons-io:commons-io:2.5'
```

Lastly, don’t forget to request the INTERNET permission in the AndroidManifest.xml file by adding `<uses-permission android:name="android.permission.INTERNET" />` inside its manifest element. Without it, your app won’t be able to connect to Google’s servers.

4. Using the Cloud Speech API

The Cloud Speech API expects audio data as one of its inputs, so we’ll start by creating an Android app that can transcribe audio files.

To keep things simple, we’ll only transcribe FLAC files, that is, files encoded with the Free Lossless Audio Codec. You might already have such files on your device. If you don’t, I suggest you download a few from Wikimedia Commons.
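Because a file’s extension or reported MIME type isn’t always reliable, you may want to confirm that the bytes you’re about to upload really are FLAC. Every FLAC stream begins with the four ASCII bytes fLaC, so a minimal check only needs to compare those. The sketch below is plain Java; the FlacSignature class and its isFlac() method are hypothetical helpers, not part of any library.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Hypothetical helper: checks the "fLaC" marker that starts every FLAC stream. */
final class FlacSignature {
    private static final byte[] MAGIC = "fLaC".getBytes(StandardCharsets.US_ASCII);

    private FlacSignature() {}

    static boolean isFlac(byte[] data) {
        // Too short to contain the marker, so it cannot be a FLAC file.
        if (data == null || data.length < MAGIC.length) {
            return false;
        }
        // Compare only the first four bytes with the expected marker.
        return Arrays.equals(Arrays.copyOf(data, MAGIC.length), MAGIC);
    }
}
```

You could call FlacSignature.isFlac() on the byte array you read from the selected file and skip the upload when it returns false.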

Step 1: Create a Layout

Our app’s layout will have a Button widget users can press to display a file picker, an interface where they can browse through and select audio files available on their devices.

The layout will also have a TextView widget to display the transcript of the selected audio file. Accordingly, add the following code to your activity’s layout XML file:

Step 2: Create a File Picker

The file picker interface should be displayed when the user presses the button we created in the previous step, so associate an OnClickListener object with it. Before you do so, get a reference to the button using the findViewById() method.

```java
Button browseButton = (Button) findViewById(R.id.browse_button);

browseButton.setOnClickListener(new View.OnClickListener() {
    @Override
    public void onClick(View view) {
        // More code here
    }
});
```

With Android’s Storage Access Framework, which is available on devices running API level 19 or higher, creating a file picker takes very little effort. All you need to do is create an intent for the ACTION_GET_CONTENT action and pass it to the startActivityForResult() method. Optionally, you can restrict the file picker to display only FLAC files by passing the appropriate MIME type to the setType() method of the Intent object.

```java
Intent filePicker = new Intent(Intent.ACTION_GET_CONTENT);
filePicker.setType("audio/flac");
startActivityForResult(filePicker, 1);
```

The output of the file picker will be another Intent object containing the URI of the file the user selected. To be able to access it, you must override the onActivityResult() method of your Activity class.

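```java
@Override
protected void onActivityResult(int requestCode, int resultCode,
                                Intent data) {
    super.onActivityResult(requestCode, resultCode, data);

    // Handle only the file picker's request (code 1) when a file was chosen
    if (requestCode == 1 && resultCode == RESULT_OK && data != null) {
        final Uri soundUri = data.getData();

        // More code here
    }
}
```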

Step 3: Encode the File

The Cloud Speech API expects its audio data to be in the form of a Base64 string. To generate such a string, you can read the contents of the file the user selected into a byte array and pass it to the encodeBase64String() method of the com.google.api.client.util.Base64 class, a utility offered by the Google API Client library.

However, you currently have only the URI of the selected file, not its absolute path. That means that, to be able to read the file, you must resolve the URI first. You can do so by passing it to the openInputStream() method of your activity’s content resolver. Once you have access to the input stream of the file, you can simply pass it to the toByteArray() method of the IOUtils class to convert it into an array of bytes. The following code shows you how:

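```java
AsyncTask.execute(new Runnable() {
    @Override
    public void run() {
        try {
            InputStream stream = getContentResolver()
                    .openInputStream(soundUri);
            byte[] audioData = IOUtils.toByteArray(stream);
            stream.close();

            String base64EncodedData =
                    Base64.encodeBase64String(audioData);

            // More code here
        } catch (IOException e) {
            // openInputStream() and toByteArray() throw checked exceptions,
            // which run() cannot declare, so they must be caught here
            e.printStackTrace();
        }
    }
});
```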

As you can see, the code above runs all the I/O operations on a background thread by passing a Runnable to AsyncTask.execute(). Doing so is important because it keeps the app’s UI from freezing while the file is being read.
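If you’d rather not add Commons IO just for IOUtils.toByteArray(), the read-and-encode step can also be written with the standard library alone. The following plain-Java sketch assumes java.util.Base64, which Android provides only from API level 26, so on the Android 4.4 devices this tutorial targets you would keep using the Google API Client’s Base64 class instead. AudioEncoder is a hypothetical class name.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.util.Base64;

/** Hypothetical helper: reads a stream fully and returns its Base64 text. */
final class AudioEncoder {
    private AudioEncoder() {}

    static byte[] readAll(InputStream in) throws IOException {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        byte[] buffer = new byte[8192];
        int count;
        // Copy the stream in 8 KB chunks until read() signals end of stream.
        while ((count = in.read(buffer)) != -1) {
            out.write(buffer, 0, count);
        }
        return out.toByteArray();
    }

    static String encodeToBase64(InputStream in) throws IOException {
        // try-with-resources closes the stream even if reading fails.
        try (InputStream stream = in) {
            return Base64.getEncoder().encodeToString(readAll(stream));
        }
    }
}
```

The design choice here is simply to avoid an extra dependency; the bytes sent to the API are the same either way.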

Step 4: Play the File

In my opinion, playing the sound file that is being transcribed, while it is being transcribed, is a good idea. It doesn’t take much effort, and it improves the user experience.

You can use the MediaPlayer class to play the sound file. Once you point it to the URI of the file using its setDataSource() method, you must call its prepare() method to synchronously prepare the player. When the player is ready, you can call its start() method to start playing the file.

Additionally, you must remember to release the player’s resources once it has completed playing the file. To do so, assign an OnCompletionListener object to it and call the player’s release() method inside the listener’s onCompletion() method. The following code shows you how:

```java
MediaPlayer player = new MediaPlayer();
player.setDataSource(MainActivity.this, soundUri);
player.prepare();
player.start();

// Release the player
player.setOnCompletionListener(
        new MediaPlayer.OnCompletionListener() {
            @Override
            public void onCompletion(MediaPlayer mediaPlayer) {
                mediaPlayer.release();
            }
        });
```

Step 5: Synchronously Transcribe the File

At this point, we can send the Base64-encoded audio data to the Cloud Speech API to transcribe it. But first, I suggest that you store the API key you generated earlier as a member variable of your Activity class.

```java
private final String CLOUD_API_KEY = "ABCDEF1234567890";
```

To be able to communicate with the Cloud Speech API, you must create a Speech object using a Speech.Builder instance. Its constructor expects three arguments: an HTTP transport, a JSON factory, and an optional HttpRequestInitializer, for which you can pass null. Additionally, to make sure that the API key is included in every HTTP request to the API, you must associate a SpeechRequestInitializer object with the builder and pass the key to it.

The following code creates a Speech object that uses the AndroidJsonFactory class as the JSON factory and, as the HTTP transport, the one returned by AndroidHttp.newCompatibleTransport(), which is a NetHttpTransport on the Android versions this app supports:

```java
Speech speechService = new Speech.Builder(
        AndroidHttp.newCompatibleTransport(),
        new AndroidJsonFactory(),
        null
).setSpeechRequestInitializer(
        new SpeechRequestInitializer(CLOUD_API_KEY))
        .build();
```
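Google’s REST APIs accept an API key as a key query parameter, and attaching that parameter to each request is essentially what SpeechRequestInitializer does for you. The plain-Java sketch below only illustrates the idea for the REST endpoints mentioned earlier; it is not the client library’s implementation, ApiKeyUrl is a hypothetical class, and the example.com URLs in the tests are placeholders.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Hypothetical helper illustrating how an API key travels with a REST call. */
final class ApiKeyUrl {
    private ApiKeyUrl() {}

    static String withApiKey(String endpoint, String apiKey) {
        try {
            // Percent-encode the key so characters like '&' or '=' cannot break the query.
            String encodedKey = URLEncoder.encode(apiKey, "UTF-8");
            // Use '&' if the endpoint already has a query string, '?' otherwise.
            String separator = endpoint.contains("?") ? "&" : "?";
            return endpoint + separator + "key=" + encodedKey;
        } catch (UnsupportedEncodingException e) {
            // Every JVM must support UTF-8, so this should never happen.
            throw new IllegalStateException(e);
        }
    }
}
```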

The Cloud Speech API must be told what language the audio file contains. You can do so by creating a RecognitionConfig object and calling its setLanguageCode() method. Here’s how you configure it to transcribe American English only:

```java
RecognitionConfig recognitionConfig = new RecognitionConfig();
recognitionConfig.setLanguageCode("en-US");
```

Additionally, the Base64-encoded string must be wrapped in a RecognitionAudio object before it can be used by the API.

```java
RecognitionAudio recognitionAudio = new RecognitionAudio();
recognitionAudio.setContent(base64EncodedData);
```
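Because both APIs are JSON-based, the client library eventually serializes the configuration and audio objects into a JSON request body. To show its shape, the sketch below builds that body by hand in plain Java. It assumes the JSON field names mirror the setters used above (config, languageCode, audio, content); SpeechRequestBody is a hypothetical class, and in the app you should let the library do this serialization.

```java
/** Hypothetical helper: the JSON a synchronous recognition request carries. */
final class SpeechRequestBody {
    private SpeechRequestBody() {}

    static String build(String languageCode, String base64Audio) {
        // Base64 text and BCP-47 tags need no JSON escaping, so reject anything else.
        if (!base64Audio.matches("[A-Za-z0-9+/=]*")) {
            throw new IllegalArgumentException("audio content is not Base64 text");
        }
        if (!languageCode.matches("[A-Za-z0-9-]+")) {
            throw new IllegalArgumentException("unexpected language code");
        }
        return "{\"config\":{\"languageCode\":\"" + languageCode + "\"},"
                + "\"audio\":{\"content\":\"" + base64Audio + "\"}}";
    }
}
```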

Next, using the RecognitionConfig and RecognitionAudio objects, you must create a SyncRecognizeRequest object. As its name suggests, it allows you to create an HTTP request to synchronously transcribe audio data. Once the object has been created, you can pass it as an argument to the syncrecognize() method and call the resulting Speech.SpeechOperations.Syncrecognize object’s execute() method to actually execute the HTTP request.

The return value of the execute() method is a SyncRecognizeResponse object, which contains a list of results, each of which may offer several alternative transcripts. For now, we’ll use only the first alternative of the first result.

```java
// Create request
SyncRecognizeRequest request = new SyncRecognizeRequest();
request.setConfig(recognitionConfig);
request.setAudio(recognitionAudio);

// Generate response
SyncRecognizeResponse response = speechService.speech()
        .syncrecognize(request)
        .execute();

// Stop if the API returned no results, e.g. because no speech was recognized
if (response.getResults() == null || response.getResults().isEmpty()) {
    return;
}

// Extract transcript
SpeechRecognitionResult result = response.getResults().get(0);
final String transcript = result.getAlternatives().get(0)
        .getTranscript();
```
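Reading only getResults().get(0) keeps the example short, but the API returns its results as a sequential list in which each result covers a consecutive portion of the audio, so a longer recording can come back split across several results. The plain-Java sketch below joins the top alternative of every result. To stay self-contained it uses lists of strings in place of the library’s SpeechRecognitionResult objects, and TranscriptJoiner is a hypothetical class; with the real objects, you would first collect result.getAlternatives().get(0).getTranscript() for each result.

```java
import java.util.List;

/** Hypothetical helper: joins the best alternative of each sequential result. */
final class TranscriptJoiner {
    private TranscriptJoiner() {}

    /**
     * @param results one entry per recognition result, in order; each entry
     *                lists that result's alternative transcripts, best first
     */
    static String join(List<List<String>> results) {
        // A null list (for example, when no speech was recognized) yields "".
        if (results == null) {
            return "";
        }
        StringBuilder transcript = new StringBuilder();
        for (List<String> alternatives : results) {
            // Skip results that carry no usable alternative instead of throwing.
            if (alternatives == null || alternatives.isEmpty() || alternatives.get(0) == null) {
                continue;
            }
            String best = alternatives.get(0).trim();
            if (best.isEmpty()) {
                continue;
            }
            // Separate consecutive results with a single space.
            if (transcript.length() > 0) {
                transcript.append(' ');
            }
            transcript.append(best);
        }
        return transcript.toString();
    }
}
```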

Finally, to display the transcript to the user, you can pass it to the TextView widget. Because changes to the user interface must always happen on the UI thread, do so inside a Runnable that you pass to your activity’s runOnUiThread() method.

```java
runOnUiThread(new Runnable() {
    @Override
    public void run() {
        TextView speechToTextResult =
                (TextView) findViewById(R.id.speech_to_text_result);
        speechToTextResult.setText(transcript);
    }
});
```

You can now run your app, select a FLAC file containing speech in American English, and see the Cloud Speech API generate a transcript for it.

It’s worth mentioning that the Cloud Speech API can currently process only single-channel audio files. If you send a file with multiple channels to it, you will get an error response.
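You can catch multi-channel files before sending them. A FLAC file starts with the fLaC marker followed by a mandatory STREAMINFO metadata block, whose packed fields include the sample rate (20 bits) and the number of channels minus one (3 bits). The sketch below reads both fields from the first 42 bytes of the file, using the offsets from the FLAC format specification; FlacStreamInfo is a hypothetical class.

```java
/** Hypothetical helper: reads fields from a FLAC file's STREAMINFO block. */
final class FlacStreamInfo {
    // 4-byte "fLaC" marker + 4-byte block header + 34-byte STREAMINFO body.
    static final int MIN_HEADER_LENGTH = 42;

    final int sampleRateHz;
    final int channels;

    private FlacStreamInfo(int sampleRateHz, int channels) {
        this.sampleRateHz = sampleRateHz;
        this.channels = channels;
    }

    static FlacStreamInfo parse(byte[] data) {
        if (data == null || data.length < MIN_HEADER_LENGTH
                || data[0] != 'f' || data[1] != 'L' || data[2] != 'a' || data[3] != 'C') {
            throw new IllegalArgumentException("Not a FLAC stream");
        }
        // The low 7 bits of byte 4 give the block type; STREAMINFO is type 0.
        if ((data[4] & 0x7F) != 0) {
            throw new IllegalArgumentException("First metadata block is not STREAMINFO");
        }
        // STREAMINFO starts at byte 8: the sample rate spans bytes 18, 19 and the
        // high nibble of byte 20, and bits 3..1 of byte 20 hold (channels - 1).
        int b18 = data[18] & 0xFF;
        int b19 = data[19] & 0xFF;
        int b20 = data[20] & 0xFF;
        int sampleRate = (b18 << 12) | (b19 << 4) | (b20 >> 4);
        int channelCount = ((b20 >> 1) & 0x07) + 1;
        return new FlacStreamInfo(sampleRate, channelCount);
    }

    boolean isMono() {
        return channels == 1;
    }
}
```

For example, you could parse audioData before encoding it and show an error instead of calling the API when isMono() returns false.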

5. Using the Cloud Natural Language API

Now that we have a transcript, we can pass it to the Cloud Natural Language API to analyze it. To keep this tutorial short, we’ll only be running entity and sentiment analysis on the transcript. In other words, we are going to determine all the entities that are mentioned in the transcript, such as people, places, and professions, and also determine whether its overall sentiment is negative, neutral, or positive.

Step 1: Update the Layout

To allow the user to start the analysis, our layout must contain another Button widget. Therefore, add the following code to your activity’s layout XML file:

Step 2: Annotate the Transcript

The Cloud Natural Language REST API offers a convenience method called annotateText that lets you run both sentiment and entity analysis on a document with a single HTTP request. We’ll be using it to analyze our transcript.

Because the analysis must begin when the user presses the button we created in the previous step, add an OnClickListener to it.

```java
Button analyzeButton = (Button) findViewById(R.id.analyze_button);

analyzeButton.setOnClickListener(new View.OnClickListener() {
    @Override
    public void onClick(View view) {
        // More code here
    }
});
```

To interact with the API using the Google API Client library, you must create a CloudNaturalLanguage object using the CloudNaturalLanguage.Builder class. Like Speech.Builder, its constructor expects an HTTP transport, a JSON factory, and an optional HttpRequestInitializer.

Furthermore, by assigning a CloudNaturalLanguageRequestInitializer instance to it, you can force it to include your API key in all its requests.

```java
final CloudNaturalLanguage naturalLanguageService =
        new CloudNaturalLanguage.Builder(
                AndroidHttp.newCompatibleTransport(),
                new AndroidJsonFactory(),
                null
        ).setCloudNaturalLanguageRequestInitializer(
                new CloudNaturalLanguageRequestInitializer(CLOUD_API_KEY)
        ).build();
```

All the text you want to analyze using the API must be placed inside a Document object. The Document object must also contain configuration information, such as the language of the text and whether it is formatted as plain text or HTML. Accordingly, add the following code:

```java
String transcript =
        ((TextView) findViewById(R.id.speech_to_text_result))
                .getText().toString();

Document document = new Document();
document.setType("PLAIN_TEXT");
document.setLanguage("en-US");
document.setContent(transcript);
```

Next, you must create a Features object specifying the features you are interested in analyzing. The following code shows you how to create a Features object that says you want to extract entities and run sentiment analysis only.

```java
Features features = new Features();
features.setExtractEntities(true);
features.setExtractDocumentSentiment(true);
```
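These objects are serialized to JSON as well, but a transcript, unlike Base64-encoded audio, can contain quotes, backslashes, or line breaks, so its text must be escaped before it can sit inside a JSON string. The plain-Java sketch below builds an annotateText-style body by hand to show that escaping. It assumes the JSON field names mirror the setters above; AnnotateRequestBody is a hypothetical class, and in the app the client library handles all of this for you.

```java
import java.util.Locale;

/** Hypothetical helper: builds an annotateText-style JSON body by hand. */
final class AnnotateRequestBody {
    private AnnotateRequestBody() {}

    /** Escapes text for use inside a JSON string literal. */
    static String escapeJson(String value) {
        StringBuilder out = new StringBuilder(value.length() + 16);
        for (int i = 0; i < value.length(); i++) {
            char c = value.charAt(i);
            switch (c) {
                case '"':
                    out.append("\\\"");
                    break;
                case '\\':
                    out.append("\\\\");
                    break;
                case '\n':
                    out.append("\\n");
                    break;
                case '\r':
                    out.append("\\r");
                    break;
                case '\t':
                    out.append("\\t");
                    break;
                default:
                    if (c < 0x20) {
                        // Other control characters need a six-character hex escape.
                        out.append(String.format(Locale.ROOT, "\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
            }
        }
        return out.toString();
    }

    static String build(String transcript, String languageCode) {
        return "{\"document\":{\"type\":\"PLAIN_TEXT\",\"language\":\""
                + escapeJson(languageCode) + "\",\"content\":\"" + escapeJson(transcript) + "\"},"
                + "\"features\":{\"extractEntities\":true,\"extractDocumentSentiment\":true}}";
    }
}
```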

You can now use the Document and Features objects to compose an AnnotateTextRequest object, which can be passed to the annotateText() method to generate an AnnotateTextResponse object.

```java
final AnnotateTextRequest request = new AnnotateTextRequest();
request.setDocument(document);
request.setFeatures(features);

AsyncTask.execute(new Runnable() {
    @Override
    public void run() {
        try {
            AnnotateTextResponse response =
                    naturalLanguageService.documents()
                            .annotateText(request).execute();

            // More code here
        } catch (IOException e) {
            // execute() throws IOException, which run() cannot declare
            e.printStackTrace();
        }
    }
});
```

Note that we are generating the response in a new thread because network operations aren’t allowed on the UI thread.

You can extract a list of entities from the AnnotateTextResponse object by calling its getEntities() method. Similarly, you can extract the overall sentiment of the transcript by calling the getDocumentSentiment() method. To get the actual sentiment score, however, you must also call the getScore() method of the returned Sentiment object, which returns a Float.

The score ranges from -1.0 to 1.0. As you might expect, a score equal to zero means the sentiment is neutral, a score greater than zero means the sentiment is positive, and a score less than zero means the sentiment is negative.
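Because the score is a floating-point number, it will rarely be exactly zero, so you may prefer to treat a small band around zero as neutral. The sketch below maps a score to a label; SentimentLabel is a hypothetical class, and the width of the neutral band is an assumption you would tune for your own content.

```java
/** Hypothetical helper: turns a document sentiment score into a label. */
final class SentimentLabel {
    private SentimentLabel() {}

    /**
     * @param score       document sentiment score, expected in [-1.0, 1.0]
     * @param neutralBand scores whose absolute value is at most this are neutral
     */
    static String of(float score, float neutralBand) {
        if (neutralBand < 0f) {
            throw new IllegalArgumentException("neutralBand must be non-negative");
        }
        if (score > neutralBand) {
            return "positive";
        }
        if (score < -neutralBand) {
            return "negative";
        }
        return "neutral";
    }
}
```

For instance, SentimentLabel.of(sentiment, 0.25f) could replace the raw number in the dialog title. The 0.25 band is arbitrary, and passing 0 reproduces the exact-zero rule described above.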

What you do with the list of entities and the sentiment score is, of course, up to you. For now, let’s just display them both using an AlertDialog instance.

```java
final List<Entity> entityList = response.getEntities();
final float sentiment = response.getDocumentSentiment().getScore();

runOnUiThread(new Runnable() {
    @Override
    public void run() {
        String entities = "";
        if (entityList != null) {
            for (Entity entity : entityList) {
                entities += "\n" + entity.getName().toUpperCase();
            }
        }

        AlertDialog dialog = new AlertDialog.Builder(MainActivity.this)
                .setTitle("Sentiment: " + sentiment)
                .setMessage("This audio file talks about:" + entities)
                .setNeutralButton("Okay", null)
                .create();
        dialog.show();
    }
});
```

With the above code, the sentiment score will be displayed in the title of the dialog, and the list of entities will be displayed in its body.

If you run the app now, you should be able to analyze the content of audio files as well as transcribe them.

Conclusion

You now know how to use the Cloud Speech and Cloud Natural Language APIs together to create an Android app that can not only transcribe an audio file but also run entity and sentiment analysis on it. In this tutorial, you also learned how to work with Android’s Storage Access Framework and the Google API Client libraries.

Google has been regularly adding new and interesting features, along with support for more languages, to both APIs. To stay up to date with them, refer to the official documentation.
