Firebase ML Kit, a collection of local and cloud-based APIs for adding machine learning capabilities to mobile apps, has recently been enhanced to support face contour detection. Thanks to this powerful feature, you no longer have to limit yourself to approximate rectangles while detecting faces. Instead, you can work with a large number of coordinates that accurately describe the shapes of detected faces and facial landmarks, such as eyes, lips, and eyebrows.

This allows you to easily create AI-powered apps that can do complex computer vision tasks such as swapping faces, recognizing emotions, or applying digital makeup.

In this tutorial, I’ll show you how to use ML Kit’s face contour detection feature to create an Android app that can highlight faces in photos.

Prerequisites

To make the most of this tutorial, you must have access to the following:

- the latest version of Android Studio

- a device running Android API level 23 or higher

1. Configuring Your Project

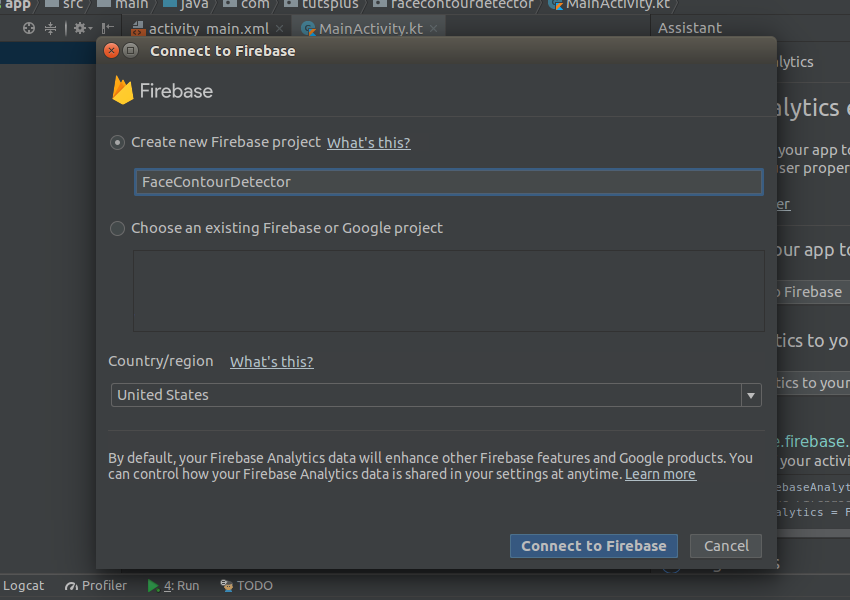

Because ML Kit is a part of the Firebase platform, you’ll need a Firebase project to be able to use it in your Android Studio project. To create one, fire up the Firebase Assistant by going to Tools > Firebase.

Next, open the Analytics section and press the Connect button. In the dialog that pops up, type in a name for your new Firebase project, select the country you are in, and press the Connect button.

Once you have a successful connection, press the Add analytics to your app button so that the assistant can make all the necessary Firebase-related configuration changes in your Android Studio project.

At this point, if you open your app module’s build.gradle file, among other changes, you should see the following implementation dependency present in it:

```groovy
implementation 'com.google.firebase:firebase-core:16.0.4'
```

To be able to use ML Kit’s face contour detection feature, you’ll need two more dependencies: one for the latest version of the ML Vision library and one for the ML Vision face model. Here’s how you can add them:

```groovy
implementation 'com.google.firebase:firebase-ml-vision:18.0.1'
implementation 'com.google.firebase:firebase-ml-vision-face-model:17.0.2'
```

In this tutorial, you’ll be working with remote images. To facilitate downloading and displaying such images, add a dependency for the Picasso library:

```groovy
implementation 'com.squareup.picasso:picasso:2.71828'
```

ML Kit’s face contour detection always runs locally on your user’s device. By default, the machine learning model that does the face contour detection is downloaded automatically the first time your app runs the detector. To improve the user experience, however, I suggest you start the download as soon as the user installs your app. To do so, add a meta-data element whose name is com.google.firebase.ml.vision.DEPENDENCIES and whose value is face inside the application element of your app’s AndroidManifest.xml file.

2. Creating the Layout

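You’re going to need three widgets in your app’s layout: an EditText widget where the user can type in the URL of an online photo, an ImageView widget to display the photo, and a Button widget to start the face contour detection process. Additionally, you’ll need a RelativeLayout widget to position the three widgets. Add all four to your main activity’s layout XML file, and give the three widgets the IDs user_input, photo, and action_button, because the code in the following steps refers to them by those names. Also set the EditText widget’s android:imeOptions attribute to actionGo, along with a single-line input type such as textUri, so that its keyboard shows a Go key.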

3. Downloading and Displaying Images

With the Picasso library, downloading and displaying a remote image takes just two method calls on the shared instance returned by Picasso.get(): load(), which specifies the URL of the image you want to download, and into(), which specifies the ImageView widget in which you want to display the downloaded image.

You must, of course, call both methods only after the user has finished typing in the URL. Therefore, call them inside an OnEditorActionListener object attached to the EditText widget you created in the previous step. The listener should return true only when it handles the Go action, so that other editor actions keep their default behavior. The following code shows you how to do so:

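```kotlin
import android.view.inputmethod.EditorInfo
import com.squareup.picasso.Picasso

// Inside onCreate(), after setContentView():
user_input.setOnEditorActionListener { _, action, _ ->
    if (action == EditorInfo.IME_ACTION_GO) {
        // Download the image at the typed URL and show it in the ImageView
        Picasso.get()
            .load(user_input.text.toString())
            .into(photo)
        true // the Go action was handled
    } else {
        false // let the system handle any other action
    }
}
```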
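The explicit else branch matters because a Kotlin lambda returns the value of its last expression. If the if block were a standalone statement followed by a trailing false, the true inside it would be evaluated and discarded, and the listener would always report the action as unhandled. The plain-Kotlin sketch below reproduces both shapes without any Android dependencies; goAction is a hypothetical stand-in for EditorInfo.IME_ACTION_GO, and the lambdas model only the return value of the real listener.

```kotlin
// Hypothetical stand-in for EditorInfo.IME_ACTION_GO; only equality matters here.
val goAction = 2

// Statement-style if: the `true` inside the block is discarded,
// so this lambda always returns the trailing `false`.
val buggyListener: (Int) -> Boolean = { action ->
    if (action == goAction) {
        true
    }
    false
}

// Expression-style if/else: it is the last expression, so its value is returned.
val fixedListener: (Int) -> Boolean = { action ->
    if (action == goAction) {
        true
    } else {
        false
    }
}
```

The compiler accepts both lambdas, so the first shape fails silently rather than at build time.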

Run the app now and try typing in a valid image URL to make sure it’s working correctly.

4. Creating a Face Contour Detector

You’ll be running all the face contour detection operations inside an on-click event handler attached to the Button widget of your layout. So add the following code to your activity before you proceed:

```kotlin
action_button.setOnClickListener {
    // Rest of the code goes here
}
```

To be able to work with face data, you must now create a FirebaseVisionFaceDetector object. However, because it doesn’t detect the contours of faces by default, you must also create a FirebaseVisionFaceDetectorOptions object that can configure it to do so.

To create a valid options object, you must follow the builder pattern. So create an instance of the FirebaseVisionFaceDetectorOptions.Builder class, call its setContourMode() method, and pass the ALL_CONTOURS constant to it to specify that you want the detector to return contour points for every supported facial feature, not just the outline of the face.

Then call the build() method of the builder to generate the options object.

```kotlin
val detectorOptions =
    FirebaseVisionFaceDetectorOptions.Builder()
        .setContourMode(
            FirebaseVisionFaceDetectorOptions.ALL_CONTOURS
        ).build()
```

You can now pass the options object to the getVisionFaceDetector() method of ML Kit’s FirebaseVision class to create your face contour detector.

```kotlin
val detector = FirebaseVision
    .getInstance()
    .getVisionFaceDetector(detectorOptions)
```

5. Collecting Coordinate Data

The face contour detector cannot directly use the photo being displayed by your ImageView widget. Instead, it expects you to pass a FirebaseVisionImage object to it. To generate such an object, extract the Bitmap object from the ImageView widget’s drawable and pass it to the fromBitmap() method of the FirebaseVisionImage class. The following code shows you how to do so:

```kotlin
val visionImage = FirebaseVisionImage.fromBitmap(
    (photo.drawable as BitmapDrawable).bitmap
)
```

You can now call the detectInImage() method of the detector to detect the contours of all the faces present in the photo. The method runs asynchronously: it returns a Task, and if that task completes successfully, your on-success listener receives a list of FirebaseVisionFace objects.

```kotlin
detector.detectInImage(visionImage).addOnSuccessListener {
    // More code here
}
```

Inside the on-success listener, you can use the implicit it variable to loop through the list of detected faces. Each face has a large number of contour points associated with it. To access those points, call the face’s getContour() method. The method can return the contour points of several different facial landmarks, depending on which FirebaseVisionFaceContour constant you pass to it. For instance, if you pass the constant LEFT_EYE to it, it returns the points you’ll need to outline the left eye. Similarly, if you pass UPPER_LIP_TOP to it, you get the points along the top edge of the upper lip.

In this tutorial, we’ll be using the FACE constant because we want to highlight the face itself. The following code shows you how to print the X and Y coordinates of all points present along the edges of each face:

```kotlin
it.forEach { face ->
    val contour = face.getContour(FirebaseVisionFaceContour.FACE)
    contour.points.forEach { point ->
        println("Point at ${point.x}, ${point.y}")
    }
    // More code here
}
```
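Because a contour is just an ordered list of 2D points, ordinary collection operations work on it. To illustrate how much more information it carries than a rectangle, the plain-Kotlin sketch below collapses a contour into the smallest axis-aligned rectangle that encloses it. Point and Box are hypothetical stand-ins for FirebaseVisionPoint and a bounding box, enclosingBox is a hypothetical helper, and this is not how ML Kit computes its own bounding boxes.

```kotlin
// Hypothetical stand-in for FirebaseVisionPoint: x and y in image pixels.
data class Point(val x: Float, val y: Float)

// Hypothetical axis-aligned rectangle.
data class Box(val left: Float, val top: Float, val right: Float, val bottom: Float)

// Smallest axis-aligned rectangle that contains every contour point.
// Returns null for an empty contour.
fun enclosingBox(points: List<Point>): Box? {
    if (points.isEmpty()) return null
    return Box(
        left = points.minOf { it.x },
        top = points.minOf { it.y },
        right = points.maxOf { it.x },
        bottom = points.maxOf { it.y }
    )
}
```

For a diamond-shaped contour, the box also covers the four corner regions the diamond leaves empty, which is exactly the imprecision that contour points avoid.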

If you try using the app now and specify an image that has at least one face in it, you should see one line of the form "Point at X, Y" in the Logcat window for every contour point of every detected face.

6. Drawing Paths Around Faces

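To highlight detected faces, let’s simply draw paths around them using the contour points. To be able to draw such paths, you’ll need a mutable copy of your ImageView widget’s bitmap, because a Canvas can draw only on a mutable bitmap. Create one inside the on-success listener, before the loop over faces, by calling the bitmap’s copy() method with the ARGB_8888 configuration and true as its second argument, which requests a mutable copy.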

```kotlin
val mutableBitmap =
    (photo.drawable as BitmapDrawable).bitmap.copy(
        Bitmap.Config.ARGB_8888, true
    )
```

Drawing the paths by directly modifying the pixels of the bitmap can be hard. So create a new 2D canvas for it by passing it to the constructor of the Canvas class.

Additionally, create a Paint object to specify the color of the pixels you want to draw on the canvas. The following code shows you how to create a canvas on which you can draw translucent red pixels:

```kotlin
val canvas = Canvas(mutableBitmap)
val myPaint = Paint(Paint.ANTI_ALIAS_FLAG)
myPaint.color = Color.parseColor("#99ff0000")
```
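The color string passed to Color.parseColor() is in #AARRGGBB form, so #99ff0000 means full red, no green or blue, and an alpha of 0x99 (153 out of 255, or 60% opaque). That alpha value is what makes the mask translucent. The plain-Kotlin sketch below decodes such a string by hand to show where each channel comes from; Argb and decodeArgb are hypothetical helpers, not part of the Android API, and they handle only the eight-digit form.

```kotlin
// Hypothetical container for the four 8-bit channels (0..255 each).
data class Argb(val alpha: Int, val red: Int, val green: Int, val blue: Int)

// Hypothetical helper: decodes an "#AARRGGBB" string into its channels.
fun decodeArgb(hex: String): Argb {
    require(hex.length == 9 && hex[0] == '#') { "expected #AARRGGBB, got $hex" }
    // Parse as Long so values with alpha >= 0x80 don't overflow a signed Int.
    val packed = hex.substring(1).toLong(16)
    return Argb(
        alpha = ((packed shr 24) and 0xFFL).toInt(),
        red = ((packed shr 16) and 0xFFL).toInt(),
        green = ((packed shr 8) and 0xFFL).toInt(),
        blue = (packed and 0xFFL).toInt()
    )
}
```

For example, decodeArgb("#99ff0000") yields Argb(alpha=153, red=255, green=0, blue=0). Lowering the first two hex digits makes the mask more transparent.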

The easiest way to draw paths on a canvas is to use the Path class. By using the intuitively named moveTo() and lineTo() methods of the class, you can easily draw complex shapes on the canvas.

To outline a face, call the moveTo() method once and pass the coordinates of the first contour point to it. By doing so, you specify where the path begins. Then pass the coordinates of each point to the lineTo() method, which adds a straight segment from the previous point. Lastly, call the close() method to join the last point back to the first. Because a Paint object’s default style is FILL, drawing the closed path fills the whole face region rather than just stroking its outline.

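Accordingly, add the following code inside the loop over faces, in place of the // More code here comment, so that each detected face gets its own path: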

```kotlin
val path = Path()
path.moveTo(contour.points[0].x, contour.points[0].y)
contour.points.forEach {
    path.lineTo(it.x, it.y)
}
path.close()
```
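Conceptually, this code turns the contour into a closed polygon: one moveTo() to the first point, one lineTo() per remaining point, and a final close(). The plain-Kotlin sketch below records those calls as data so the resulting sequence can be inspected; ContourPoint, PathCommand, and outline are hypothetical stand-ins, not Android types. Because the loop above starts at index 0, its first lineTo() targets the point the path already starts at, which adds a harmless zero-length segment. The sketch skips that point with drop(1), and it returns an empty list if a contour ever comes back with no points, a case in which contour.points[0] would throw.

```kotlin
// Hypothetical stand-in for a contour point (x, y in image pixels).
data class ContourPoint(val x: Float, val y: Float)

// Hypothetical record of the calls made on android.graphics.Path.
sealed class PathCommand {
    data class MoveTo(val x: Float, val y: Float) : PathCommand()
    data class LineTo(val x: Float, val y: Float) : PathCommand()
    object Close : PathCommand()
}

// moveTo the first point, lineTo every later point, then close the shape.
fun outline(points: List<ContourPoint>): List<PathCommand> {
    if (points.isEmpty()) return emptyList()
    val commands = mutableListOf<PathCommand>(PathCommand.MoveTo(points[0].x, points[0].y))
    points.drop(1).forEach { commands += PathCommand.LineTo(it.x, it.y) }
    commands += PathCommand.Close
    return commands
}
```

For a three-point contour, outline() yields MoveTo, LineTo, LineTo, Close, which describes a filled triangle once drawn with a FILL-style Paint.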

To render the path, pass it to the drawPath() method of the canvas, along with the Paint object.

```kotlin
canvas.drawPath(path, myPaint)
```

And to update the ImageView widget to display the modified bitmap, pass the bitmap to its setImageBitmap() method once all faces have been drawn, after the loop over faces.

```kotlin
photo.setImageBitmap(mutableBitmap)
```

If you run the app now, you should be able to see it draw translucent red masks over all the faces it detects.

Conclusion

With ML Kit’s new face contour detection API and a little bit of creativity, you can easily create AI-powered apps that can do complex computer vision tasks such as swapping faces, detecting emotions, or applying digital makeup. In this tutorial, you learned how to use the 2D coordinates the API generates to draw shapes that highlight the faces present in a photo.

The face contour detection API can handle a maximum of five faces in a photo. For maximum speed and accuracy, however, I suggest you use it only with photos that have one or two faces.

To learn more about the API, do refer to the official documentation.
