Apple has been working on augmented reality for the last couple of years, and at WWDC18 it released the latest version of its AR framework: ARKit 2. This release is packed with new features, and in this article, we’ll look at what’s new.

At a Glance

Before we dive into the changes, let’s take a moment to review what ARKit actually is. ARKit is a framework that lets developers create immersive augmented reality apps for iOS devices. With it, realistic AR no longer requires expensive dedicated hardware; the built-in camera and motion sensors of an iOS device are enough.

How Does It Work?

Because ARKit operates only using the built-in camera and sensors of your iOS device, it requires sophisticated software to make virtual objects appear lifelike in the scene. It uses horizontal plane detection, feature points, and light estimation to give virtual objects realistic characteristics.
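
As a rough illustration of how these pieces surface in code, the sketch below enables horizontal plane detection and light estimation on a world-tracking session, then reads the light estimate and feature points from each frame. The class name `SceneObserver` is hypothetical and chosen for this example; it is one possible arrangement, not the only way to structure an ARKit app.

```swift
import ARKit

// Hypothetical helper (not from the article): configures a session and
// inspects each frame for the signals described above.
final class SceneObserver: NSObject, ARSessionDelegate {

    func start(_ session: ARSession) {
        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = [.horizontal]   // detect flat surfaces such as floors and tables
        configuration.isLightEstimationEnabled = true  // enabled by default; set explicitly for clarity
        session.delegate = self                        // the delegate is held weakly, so keep this object alive
        session.run(configuration)
    }

    // Called whenever ARKit produces a new camera frame.
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // Ambient intensity is measured in lumens; about 1000 represents neutral lighting.
        if let intensity = frame.lightEstimate?.ambientIntensity {
            print("Ambient intensity: \(intensity)")
        }
        let featureCount = frame.rawFeaturePoints?.points.count ?? 0
        print("Tracked feature points: \(featureCount)")
    }

    // Called when ARKit adds anchors, including detected planes.
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let plane as ARPlaneAnchor in anchors {
            print("Detected plane with extent \(plane.extent)")
        }
    }
}
```

Printing on every frame is only for demonstration; a real app would typically feed the light estimate into its renderer instead.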

What Sensors Does It Use?

ARKit is developed by Apple, so it runs on Apple’s own devices, specifically iOS devices. These devices contain sensors that ARKit relies on when placing objects in the real world. ARKit combines imagery from the built-in camera with motion data from the accelerometer and gyroscope, a technique known as visual-inertial odometry, to track the device’s position and orientation.

1. Persisting World Maps

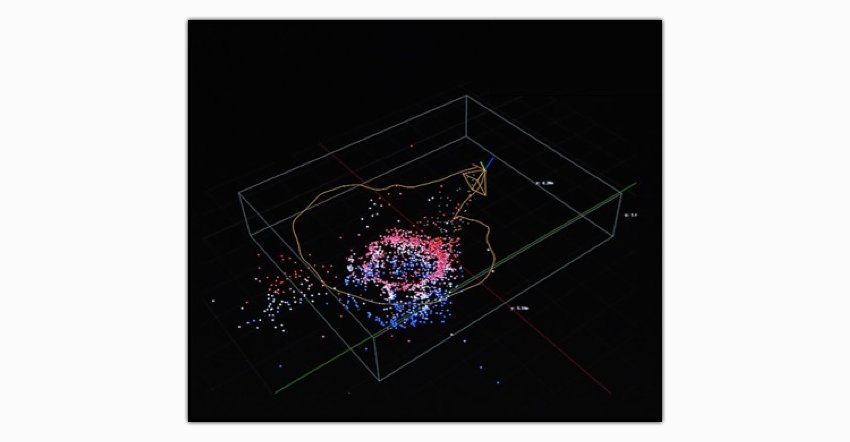

The session state in a world map includes ARKit’s awareness of the physical space the user moves the device in (which ARKit uses to determine the device’s position and orientation), as well as any ARAnchor objects added to the session (which can represent detected real-world features or virtual content placed by your app). —Apple Documentation

According to Apple’s definition, a world map includes the anchors and other features that ARKit uses to remain aware of the space around the user. In earlier versions of ARKit, this map was created at the start of a session and discarded at the end.

ARKit 2 makes it possible to persist these world maps, so an experience can be shared with others or saved for later use in the same application.

Stored Maps

Persistent world maps mean you can retain user progress and let the user pick up right where they left off. This opens possibilities such as block-building games in which the user completes the game over a series of steps or levels rather than in a single session.
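
A minimal sketch of saving and restoring a world map might look like the following. The function names and the file URL are assumptions made for this example; the archiving approach relies on `ARWorldMap` supporting secure coding.

```swift
import ARKit

// Captures the current world map and writes it to disk.
// `url` is a hypothetical location chosen by the app, for example in the Documents directory.
func saveWorldMap(from session: ARSession, to url: URL) {
    session.getCurrentWorldMap { worldMap, error in
        guard let map = worldMap else {
            print("World map unavailable: \(error?.localizedDescription ?? "unknown error")")
            return
        }
        do {
            let data = try NSKeyedArchiver.archivedData(withRootObject: map,
                                                        requiringSecureCoding: true)
            try data.write(to: url, options: [.atomic])
        } catch {
            print("Could not save world map: \(error.localizedDescription)")
        }
    }
}

// Reads a previously saved world map back from disk.
func loadWorldMap(from url: URL) throws -> ARWorldMap? {
    let data = try Data(contentsOf: url)
    return try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self, from: data)
}

// Restarts the session so that ARKit relocalizes against the saved map.
func restoreSession(_ session: ARSession, with worldMap: ARWorldMap) {
    let configuration = ARWorldTrackingConfiguration()
    configuration.initialWorldMap = worldMap
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```

After restoring, the user generally needs to point the device at the same physical area so ARKit can match the saved map to what the camera sees.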

Multi-User Experiences

With the ability to store and share world maps, two devices can track the same world map, which enables multi-user gaming and other shared augmented reality experiences. For example, you can play a virtual tower-smashing game with multiple devices.
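
One way to share a map between devices is to serialize it and send it over a peer-to-peer connection. The sketch below assumes MultipeerConnectivity as the transport and an `MCSession` that has already been set up and connected elsewhere; the article does not prescribe a transport, so treat this as one option among several.

```swift
import ARKit
import MultipeerConnectivity

// Sends the given world map to every connected peer.
// `mcSession` is assumed to be an already connected MCSession.
func share(_ worldMap: ARWorldMap, over mcSession: MCSession) throws {
    guard !mcSession.connectedPeers.isEmpty else { return }
    let data = try NSKeyedArchiver.archivedData(withRootObject: worldMap,
                                                requiringSecureCoding: true)
    try mcSession.send(data, toPeers: mcSession.connectedPeers, with: .reliable)
}

// Call this from the receiving side, e.g. in MCSessionDelegate's
// session(_:didReceive:fromPeer:), to join the sender's coordinate space.
func join(_ arSession: ARSession, using receivedData: Data) throws {
    guard let worldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                from: receivedData) else {
        return
    }
    let configuration = ARWorldTrackingConfiguration()
    configuration.initialWorldMap = worldMap
    arSession.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```

Once both devices track the same map, anchors placed by one player can be sent the same way and will appear in the same physical spot for the other.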

2. Environment Texturing

Environment textures are cube-map textures that depict the view in all directions from a specific point in a scene. In 3D asset rendering, environment textures are the basis for image-based lighting algorithms where surfaces can realistically reflect light from their surroundings. ARKit can generate environment textures during an AR session using camera imagery, allowing SceneKit or a custom rendering engine to provide realistic image-based lighting for virtual objects in your AR experience. —Apple Documentation

When using augmented reality, it’s important to make objects blend in with the environment around them. In the first version of ARKit, features such as ambient light detection attempted to make the virtual object “fit in” with the scene.

ARKit 2 allows objects to reflect the textures around them. For example, if a shiny virtual ball is placed next to a fruit platter, you will see the fruit reflected on the ball. The lighting of virtual objects is no longer staged; it is image-based, derived from what the camera sees.
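
To make this concrete, here is a small sketch that turns on automatic environment texturing and builds a reflective sphere with SceneKit. The function names and the sphere size are assumptions for illustration.

```swift
import ARKit
import SceneKit

// Asks ARKit to generate environment textures automatically from camera imagery.
func makeReflectiveConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.environmentTexturing = .automatic
    return configuration
}

// Builds a mirror-like ball. A physically based material with high metalness
// and low roughness picks up reflections from the environment texture.
func makeShinyBall(radius: CGFloat = 0.05) -> SCNNode {
    let sphere = SCNSphere(radius: radius)   // radius in meters
    let material = SCNMaterial()
    material.lightingModel = .physicallyBased
    material.metalness.contents = 1.0
    material.roughness.contents = 0.0
    sphere.materials = [material]
    return SCNNode(geometry: sphere)
}
```

Running the session with this configuration and adding the node to an `ARSCNView` scene should be enough for SceneKit to apply the generated textures as image-based lighting.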

3. 3D Object Recognition

One way to build compelling AR experiences is to recognize features of the user’s environment and use them to trigger the appearance of virtual content. For example, a museum app might add interactive 3D visualizations when the user points their device at a displayed sculpture or artifact.—Apple Documentation

Three-dimensional virtual objects have always been at the core of ARKit. The latest version of the framework adds something that wasn’t possible before: the ability to scan real-world 3D objects and recognize them later.

Museum Exhibits

ARKit 2 lets museums and similar organizations “scan” their exhibits so that information panels appear above each exhibit when a visitor points a device at it. This can save valuable space by eliminating physical info-boards, and it allows information to be updated quickly.
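
The museum scenario could be sketched roughly as follows. The asset catalog group name `"Exhibits"` and the class name `ExhibitDetector` are hypothetical, and the reference objects are assumed to have been scanned and added to the app beforehand.

```swift
import ARKit
import SceneKit

// Hypothetical helper: detects previously scanned exhibits and labels them.
final class ExhibitDetector: NSObject, ARSCNViewDelegate {

    func start(in sceneView: ARSCNView) {
        // "Exhibits" is an assumed AR resource group in the app's asset catalog.
        guard let exhibits = ARReferenceObject.referenceObjects(inGroupNamed: "Exhibits",
                                                                bundle: nil) else {
            print("No reference objects found in the asset catalog")
            return
        }
        let configuration = ARWorldTrackingConfiguration()
        configuration.detectionObjects = exhibits
        sceneView.delegate = self   // held weakly, so keep this object alive
        sceneView.session.run(configuration)
    }

    // ARKit adds an ARObjectAnchor when it recognizes one of the reference objects.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let objectAnchor = anchor as? ARObjectAnchor else { return }
        let object = objectAnchor.referenceObject

        // A simple text label standing in for a richer information panel.
        let text = SCNText(string: object.name ?? "Unknown exhibit", extrusionDepth: 0.5)
        let label = SCNNode(geometry: text)
        label.scale = SCNVector3(0.002, 0.002, 0.002)   // SCNText is large by default

        // Position the label slightly above the top of the object's bounding box.
        label.position = SCNVector3(object.center.x,
                                    object.center.y + object.extent.y / 2 + 0.05,
                                    object.center.z)
        node.addChildNode(label)
    }
}
```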

Action Figures

Action figure enthusiasts can scan their collection to attach information to each figure or make the figures come to life. At WWDC18, we saw a LEGO app that brought LEGO sets to life and supported multiplayer experiences.

4. Face Tracking

A face tracking configuration detects the user’s face in view of the device’s front-facing camera. When running this configuration, an AR session detects the user’s face (if visible in the front-facing camera image) and adds to its list of anchors an ARFaceAnchor object representing the face. Each face anchor provides information about the face’s position and orientation, its topology, and features that describe facial expressions.—Apple Documentation

With the introduction of the iPhone X, we saw Face ID and Animoji for the first time on iOS, both powered by the TrueDepth front-facing camera (which includes an IR dot projector). ARKit’s face tracking relies on the same camera. It tracks a face rather than recognizing whose face it is, and at WWDC18 Apple expanded it with gaze and tongue detection.

In ARKit 2, the face’s position in space, the shape, and the facial expression can be determined. This information can be used to create “smart-filters” or other apps which make use of the face.
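
As a hedged sketch of how an app might read this information, the code below starts a face tracking session and inspects the face anchor on each update. The class name `FaceObserver` is made up for this example.

```swift
import ARKit
import SceneKit

// Hypothetical helper: runs face tracking and reads position, expression, and gaze.
final class FaceObserver: NSObject, ARSCNViewDelegate {

    func start(in sceneView: ARSCNView) {
        // Face tracking is only available on devices with a supported front camera.
        guard ARFaceTrackingConfiguration.isSupported else {
            print("Face tracking is not supported on this device")
            return
        }
        sceneView.delegate = self   // held weakly, so keep this object alive
        sceneView.session.run(ARFaceTrackingConfiguration())
    }

    // Called each time ARKit updates the tracked face.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let faceAnchor = anchor as? ARFaceAnchor else { return }

        // Position of the face in world space (translation column of the transform).
        let position = faceAnchor.transform.columns.3

        // Blend shapes describe expressions as coefficients from 0.0 to 1.0.
        let jawOpen = faceAnchor.blendShapes[.jawOpen]?.floatValue ?? 0
        let tongueOut = faceAnchor.blendShapes[.tongueOut]?.floatValue ?? 0   // added in iOS 12

        // Estimated point the user is looking at, in face coordinates (iOS 12).
        let gaze = faceAnchor.lookAtPoint

        // The mesh describing the shape of the face.
        let vertexCount = faceAnchor.geometry.vertices.count

        print("pos: \(position), jaw: \(jawOpen), tongue: \(tongueOut), gaze: \(gaze), vertices: \(vertexCount)")
    }
}
```

A filter-style app would map these coefficients onto a virtual mask or character instead of printing them.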

5. USDZ File Format

Apple is introducing a new open file format, usdz, which is optimized for sharing in apps like Messages, Safari, Mail, Files and News, while retaining powerful graphics and animation features. Using usdz, Quick Look for AR also allows users to place 3D objects into the real world to see how something would work in a space.—Apple Documentation

In collaboration with Pixar, Apple introduced a brand new file format for 3D objects alongside ARKit 2. It lets virtual objects be shared with others in a single open, compact format, and they can even be embedded in web pages.

Quick Look

USDZ files can be shared through websites, text messages, or emails. With a tap of the “quick-look” button in iOS 12, the recipient can preview the 3D object and place it in the real world.
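
Inside an app, a similar preview can be presented with the QuickLook framework. The sketch below is one possible arrangement; the class name `ModelPreviewDataSource` and the resource name `"chair"` in the usage note are hypothetical.

```swift
import UIKit
import QuickLook

// Supplies a single USDZ file to a Quick Look preview.
final class ModelPreviewDataSource: NSObject, QLPreviewControllerDataSource {
    private let fileURL: URL

    init(fileURL: URL) {
        self.fileURL = fileURL
        super.init()
    }

    func numberOfPreviewItems(in controller: QLPreviewController) -> Int {
        return 1
    }

    func previewController(_ controller: QLPreviewController,
                           previewItemAt index: Int) -> QLPreviewItem {
        // NSURL conforms to QLPreviewItem, so the file URL can be returned directly.
        return fileURL as NSURL
    }
}

// Builds a preview controller for the given data source.
// QLPreviewController holds its data source weakly, so the caller must
// keep `dataSource` alive for as long as the preview is on screen.
func makePreviewController(dataSource: ModelPreviewDataSource) -> QLPreviewController {
    let preview = QLPreviewController()
    preview.dataSource = dataSource
    return preview
}
```

For example, a view controller could look up a bundled model with `Bundle.main.url(forResource: "chair", withExtension: "usdz")`, store a `ModelPreviewDataSource` for it in a property, and present the controller returned by `makePreviewController(dataSource:)`.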

Creating Files

USDZ files can be created using popular software from companies such as Adobe (Creative Cloud), Autodesk, and Sketchfab. These companies announced support for the new file format during WWDC18.

Conclusion

As you can see, Apple has made several improvements to the ARKit framework, both under the hood and in user-facing features, which developers and users alike can enjoy.

Stay tuned to Envato Tuts+ for more about these topics. In the meantime, check out the documentation for each of the features mentioned in this article and try them out for yourself!
