Machine learning is great, but it can be hard to implement in mobile applications. This is especially true for people without a data science degree. With Core ML, however, Apple makes it easy to add machine learning to your existing iOS app using the all-new Create ML platform for training lightweight, custom neural networks.

At a Glance

What Is Machine Learning?

Machine learning is the use of statistical analysis to help computers make decisions and predictions based on characteristics found in data. In other words, it’s the act of having a computer parse a stream of data to form an abstract understanding of it (called a “model”), and then using that model to interpret newer data.

How Is It Used?

Many of your favorite apps on your phone are likely to incorporate machine learning. For example, when you’re typing a message, autocorrect predicts what you’re about to type next using a machine learning model which is constantly updated as you type. Even virtual assistants, such as Siri, Alexa, and Google Assistant, rely heavily on machine learning to mimic human-like behavior.

Getting Started

Let’s finally use your new-found knowledge about machine learning to actually build your first model! You’ll need to make sure you have Xcode 10 installed, along with macOS Mojave running on your development Mac. In addition, I’ll assume you already have experience with Swift, Xcode, and iOS development in general.

1. Dataset and Images

In this tutorial, we’ll be classifying images based on whether they have a tree or a flower. However, it is recommended that you follow along with your own images and objects you’d like to classify instead. For this reason, you will not be provided with the flower and tree images used in this example.

Finding Images

If you’re having trouble finding images (or if you don’t have enough of your own images), try PhotoDune or Google Images. For the purposes of learning, this should be enough for you to get started. Try to find images which have a distinct main object (e.g. one orange, one tree) instead of multiple (e.g. bunches of bananas, entire forests) to avoid confusing the model to start off with. Of course, you can always add more complications later, after you have some experience.

80:20 Data Science Rule

In the field of data science, it is good practice to divide your dataset into two parts: one for training the model, and one for testing it. Since the model learns only from the training set, most of your data (about 80%) should be used for training. Hold back the remaining 20% so you can check how the model handles images it has never seen; after all, that’s important too!
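If you’d rather not count images by hand, the 80:20 arithmetic can be sketched in a few lines of Swift. This is only an illustration: the `splitDataset` function and the `image-N.jpg` file names are made up for this example, and the 0.8 default simply mirrors the rule above.

```swift
import Foundation

/// Shuffles `items` and splits them into a training and a testing portion.
/// `trainingFraction` is clamped to 0...1; 0.8 follows the 80:20 rule.
func splitDataset<T>(_ items: [T], trainingFraction: Double = 0.8) -> (training: [T], testing: [T]) {
    let fraction = min(max(trainingFraction, 0), 1)
    let shuffled = items.shuffled()
    // Round to the nearest whole image.
    let cut = Int((Double(shuffled.count) * fraction).rounded())
    return (Array(shuffled[..<cut]), Array(shuffled[cut...]))
}

// Hypothetical file names, used only to show the proportions.
let imageNames = (1...10).map { "image-\($0).jpg" }
let portions = splitDataset(imageNames)
print(portions.training.count, portions.testing.count) // prints "8 2"
```

Shuffling before the split keeps the testing portion from being, say, only the last images you happened to download.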

Splitting the Difference

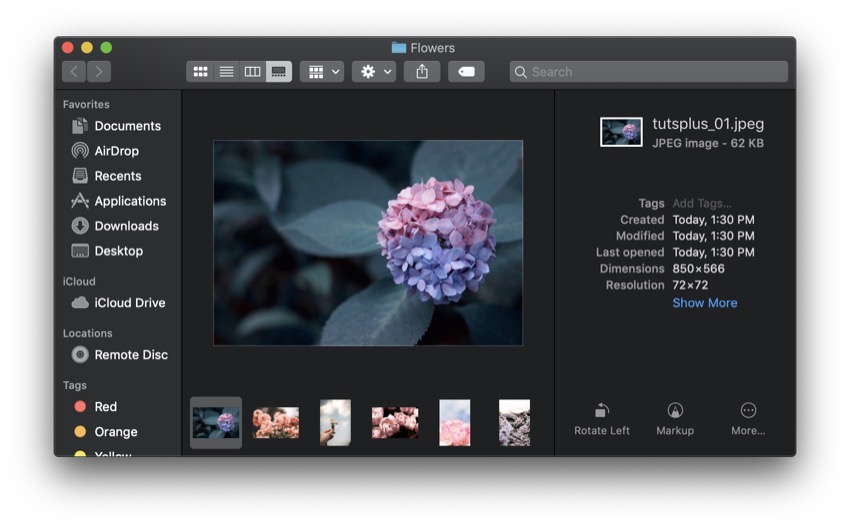

With the 80:20 rule in mind, go ahead and create two folders, Training and Testing, and put the right number of images into each. Once you have 80% of your data in Training and 20% in Testing, it’s finally time to split the training images up by category. For my example, I’ll have two folders named Flowers and Trees in my Training folder, and I will leave the other 20% of the images unsorted in my Testing folder.
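The folder layout described above can also be created from code, which is handy if you rebuild your dataset often. Treat this as a rough sketch: the `makeDatasetFolders` helper and the `Dataset` root in the temporary directory are assumptions for illustration, and the category names come from my flower and tree example.

```swift
import Foundation

/// Creates Training/<category> for every category, plus one unsorted Testing folder.
/// Returns the folders it created (or found already in place).
func makeDatasetFolders(at root: URL, categories: [String]) throws -> [URL] {
    var folders = categories.map {
        root.appendingPathComponent("Training").appendingPathComponent($0)
    }
    folders.append(root.appendingPathComponent("Testing"))
    for folder in folders {
        try FileManager.default.createDirectory(at: folder,
                                                withIntermediateDirectories: true,
                                                attributes: nil)
    }
    return folders
}

// Hypothetical location; point this at wherever you keep your images.
let datasetRoot = FileManager.default.temporaryDirectory.appendingPathComponent("Dataset")

do {
    let created = try makeDatasetFolders(at: datasetRoot, categories: ["Flowers", "Trees"])
    created.forEach { print($0.path) }
} catch {
    print("Could not create the dataset folders: \(error)")
}
```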

2. Training the Model

So, let’s dive in and actually create the model. You might be surprised to hear that most of your work is already done! Now, all we have left to do is write some code and put Swift and Xcode to work to do the magic for us.

Creating a New Playground

While most of us are used to creating actual iOS applications, we’ll be headed to the playground this time to create our machine learning models. Interesting, isn’t it? If you think about it, it actually makes sense—you don’t need all those extra files but just need a clean slate to tell Swift how to create your model. Go ahead and create a macOS playground to start off.

First, open Xcode.

Then create a new playground.

And give it a useful name.

The Three Lines

Contrary to what you may have thought, you just need three lines of code to get your playground ready to train your model. Delete all of the boilerplate code which is generated automatically, and then do the following:

Import the CreateMLUI framework to enable Create ML in your Swift Playground:

import CreateMLUI

Then, create an instance of MLImageClassifierBuilder and call its showInLiveView() method to be able to interact with the class in an intuitive user interface within your playground:

let builder = MLImageClassifierBuilder()
builder.showInLiveView()

Great! That’s all you need to do in terms of code. Now, you’re finally ready to drag and drop your images to create a fully functional Core ML model.

Drag and Drop

Now, we’ve developed a user interface where we can start adding our images and watch the magic unfold! For this example, I have seven images of flowers and seven images of trees. Of course, this won’t be enough for a super-accurate model, but it does the trick.

When you open the assistant editor, you’ll see a box which says Drop Images To Begin Training, where you can drag your Training folder. After a couple of seconds, you’ll see some output in your playground. Now you’re ready to test your newly made Core ML model.

3. Testing and Exporting

After you’ve finished training your model, it’s easy to test the model and download it to use in your apps. You can test it right in your playground without ever having to create a project. When you know that your model is ready, you can put it in an iOS (or macOS) app.

Testing the Model

Remember the Testing folder you created? Go ahead and drag the entire folder onto your playground (where you dropped your Training images earlier in the tutorial). You should see your images appear in a list, along with what the model thinks each of them is. You might be surprised—even with so little data, you can still get a pretty accurate model.
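To put a number on “pretty accurate”, you can compare the model’s answers with the labels you know to be correct. The sketch below computes accuracy, precision, and recall by hand. The `Prediction` type, the helper functions, and the four sample results are all invented for illustration; they are not output from a real model.

```swift
/// One test image: the label you know is correct and the label the model gave it.
struct Prediction {
    let actual: String
    let predicted: String
}

/// Fraction of all test images the model labeled correctly.
func accuracy(of results: [Prediction]) -> Double? {
    guard !results.isEmpty else { return nil }
    let correct = results.filter { $0.actual == $0.predicted }.count
    return Double(correct) / Double(results.count)
}

/// Of the images the model called `label`, the fraction that really are `label`.
func precision(for label: String, in results: [Prediction]) -> Double? {
    let predictedAsLabel = results.filter { $0.predicted == label }
    guard !predictedAsLabel.isEmpty else { return nil }
    let truePositives = predictedAsLabel.filter { $0.actual == label }.count
    return Double(truePositives) / Double(predictedAsLabel.count)
}

/// Of the images that really are `label`, the fraction the model found.
func recall(for label: String, in results: [Prediction]) -> Double? {
    let actuallyLabel = results.filter { $0.actual == label }
    guard !actuallyLabel.isEmpty else { return nil }
    let truePositives = actuallyLabel.filter { $0.predicted == label }.count
    return Double(truePositives) / Double(actuallyLabel.count)
}

// Made-up results: one flower was mistaken for a tree.
let sampleResults = [
    Prediction(actual: "Flowers", predicted: "Flowers"),
    Prediction(actual: "Flowers", predicted: "Flowers"),
    Prediction(actual: "Flowers", predicted: "Trees"),
    Prediction(actual: "Trees", predicted: "Trees"),
]

print("Accuracy:", accuracy(of: sampleResults).map { String($0) } ?? "n/a")
for label in ["Flowers", "Trees"] {
    let p = precision(for: label, in: sampleResults).map { String($0) } ?? "n/a"
    let r = recall(for: label, in: sampleResults).map { String($0) } ?? "n/a"
    print("\(label): precision \(p), recall \(r)")
}
```

In this sample, every image the model called a flower really was one (precision 1.0 for Flowers), but it only found two of the three flowers, so recall for Flowers is lower.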

Downloading the Model

When you’re satisfied with your model, you can export it in the Core ML format and use it in your apps. Next to Image Classifier, go ahead and click the downward facing arrow to reveal some fields which you can alter to change the name, author, or description of your model. You can also choose where to download it.

When you hit the blue Save button, your .mlmodel file will appear in your desired location. If you’re interested, you can also read the output in the playground to learn information such as the precision, recall, and where your model was saved.
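If you prefer code to drag and drop, the train, test, and export steps can also be scripted in a macOS playground with the CreateML framework (rather than the CreateMLUI builder used in this tutorial). Take this as a minimal sketch: every path, the `FlowerTreeClassifier` file name, and the metadata values are placeholders I made up, and it assumes the Training and Testing folders are laid out as described earlier.

```swift
import CreateML
import Foundation

// Hypothetical locations; replace them with your own folders.
let trainingDir = URL(fileURLWithPath: "/path/to/Training")
let testingDir = URL(fileURLWithPath: "/path/to/Testing")
let outputURL = URL(fileURLWithPath: "/path/to/FlowerTreeClassifier.mlmodel")

do {
    // Each subfolder of Training (here: Flowers, Trees) becomes a label.
    let classifier = try MLImageClassifier(trainingData: .labeledDirectories(at: trainingDir))
    let trainingAccuracy = (1.0 - classifier.trainingMetrics.classificationError) * 100
    print("Training accuracy: \(trainingAccuracy)%")

    // The Testing folder is unsorted, so ask for one prediction per image.
    let testImages = try FileManager.default.contentsOfDirectory(
        at: testingDir,
        includingPropertiesForKeys: nil,
        options: [.skipsHiddenFiles])
    for image in testImages {
        let label = try classifier.prediction(from: image)
        print("\(image.lastPathComponent): \(label)")
    }

    // Placeholder metadata; this mirrors the fields you can edit before saving.
    let metadata = MLModelMetadata(author: "Your Name",
                                   shortDescription: "Classifies images as flowers or trees",
                                   version: "1.0")
    try classifier.write(to: outputURL, metadata: metadata)
    print("Saved model to \(outputURL.path)")
} catch {
    print("Training or export failed: \(error)")
}
```

This needs macOS and Xcode to run, so it won’t work in an iOS playground.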

Using the Model

This tutorial assumes you’re familiar with Core ML models in general, but I’ll briefly explain how it works. For more information on how to use the model once it’s in your app, you can read my other tutorial:

Get Started With Image Recognition in Core ML

To use the model, drag it into your Xcode project (as you would an image or audio file). Then, import Core ML into the file where you’d like to use it. With a few additional steps, you should be able to treat the model like a Swift class and call methods on it as described in my other tutorial.
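As a rough idea of what those additional steps can look like, here is one common approach that runs the model through the Vision framework. Using Vision is my assumption here, not the only option. `FlowerTreeClassifier` is a hypothetical class name: Xcode generates a class named after your .mlmodel file, so substitute your own, and note that newer Xcode versions prefer the generated `init(configuration:)` initializer.

```swift
import CoreML
import Vision
import UIKit

/// Returns the most likely label for `image`, or nil if nothing was recognized.
/// `FlowerTreeClassifier` is a placeholder for the class Xcode generates from your model file.
func classify(_ image: UIImage) throws -> String? {
    guard let cgImage = image.cgImage else { return nil }

    // Wrap the Core ML model so Vision can scale and crop the image for it.
    let visionModel = try VNCoreMLModel(for: FlowerTreeClassifier().model)
    let request = VNCoreMLRequest(model: visionModel)

    // perform(_:) runs synchronously; the results are available once it returns.
    let handler = VNImageRequestHandler(cgImage: cgImage, options: [:])
    try handler.perform([request])

    // Observations are sorted by confidence, so the first one is the best guess.
    let observations = request.results as? [VNClassificationObservation]
    return observations?.first?.identifier
}
```

Since the call blocks until the prediction is done, you would typically run it on a background queue and update your UI on the main queue afterwards.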

For more information on how to do this, you can also visit Apple’s documentation and read about how to integrate machine learning into your app.

Conclusion

In this tutorial, you learned how to build a custom image classification neural network easily while only writing three lines of code. You trained this model with your own data, and then used 20% of it to test the model. Once it was working, you exported it and added it to your own app.

I hope you enjoyed this tutorial, and I strongly encourage you to check out some of our other machine learning courses and tutorials here on Envato Tuts+!

New Course: Image Recognition on iOS With Core ML

Get Started With Image Recognition in Core ML

How to Train a Core ML Model for an iOS App

Conversation Design User Experiences for SiriKit and iOS

If you have any comments or questions, please don’t hesitate to leave them in the comments section below.
